Cashierless Store

Creating a seamless and secure environment where customers could browse, select products, and leave without traditional checkout processes.

This project aimed to develop a fully automated, cashier-free retail experience for the Ministry of Digital Development of the Republic of Tatarstan, Russia.

Customer Identification

For secure entry, customers registered via a kiosk system combining biometric authentication and RFID-enabled access cards.

The facial recognition pipeline was built on ArcFace, a state-of-the-art face recognition method whose additive angular margin loss makes the learned face embeddings more discriminative.
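
As a minimal sketch of how the additive angular margin works (not the project's training code), the function below computes the ArcFace loss for a single embedding. The embedding and each class weight vector are L2-normalized, the margin m is added to the angle between the embedding and its true class, and all logits are scaled by s before softmax cross-entropy. The defaults s = 64 and m = 0.5 are common ArcFace settings and are assumptions here. Production implementations also handle the case θ + m > π, which this sketch omits.

```python
import math
from typing import List

def l2_normalize(v: List[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def arcface_loss(embedding: List[float], class_weights: List[List[float]],
                 target: int, s: float = 64.0, m: float = 0.5) -> float:
    """Additive angular margin (ArcFace) cross-entropy for one sample."""
    x = l2_normalize(embedding)
    logits = []
    for j, w in enumerate(class_weights):
        cos_theta = sum(a * b for a, b in zip(x, l2_normalize(w)))
        cos_theta = max(-1.0, min(1.0, cos_theta))  # guard acos against rounding
        if j == target:
            theta = math.acos(cos_theta)
            logits.append(s * math.cos(theta + m))  # margin is added to the angle
        else:
            logits.append(s * cos_theta)            # non-target classes: plain scaled cosine
    # Numerically stable log-softmax cross-entropy for the target class.
    max_logit = max(logits)
    log_sum = max_logit + math.log(sum(math.exp(z - max_logit) for z in logits))
    return log_sum - logits[target]
```

For example, `arcface_loss([1.0, 0.1], [[1.0, 0.0], [0.0, 1.0]], target=1)` is larger than the same call with `m=0.0`, because the margin lowers the target logit and so forces a tighter angular fit during training.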

We integrated live anti-spoofing checks based on vision transformer architectures to detect presentation attacks, such as printed photos or replayed videos held up to the camera. For edge deployment, we quantized the model with TensorRT and brought inference latency under 80 ms per frame on NVIDIA Jetson devices.
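
The text does not say which precision TensorRT was configured for. Assuming INT8, the core arithmetic is symmetric per-tensor scaling, sketched below with the simplest max-based scale. This is an illustration only: TensorRT chooses scales through its own calibrators (for example entropy calibration), and none of these helper names are TensorRT APIs.

```python
from typing import List

def int8_scale(values: List[float]) -> float:
    """Per-tensor symmetric scale: map the largest magnitude to 127."""
    amax = max(abs(v) for v in values)
    return amax / 127.0 if amax > 0 else 1.0

def quantize(values: List[float], scale: float) -> List[int]:
    # Round to the nearest integer step, then clamp to [-127, 127]
    # so the grid stays symmetric around zero.
    return [max(-127, min(127, round(v / scale))) for v in values]

def dequantize(q: List[int], scale: float) -> List[float]:
    # Map integer codes back to approximate real values.
    return [x * scale for x in q]
```

Each dequantized value differs from the original by at most half a step (scale / 2), which is the accuracy cost traded for smaller weights and faster integer arithmetic on the Jetson.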

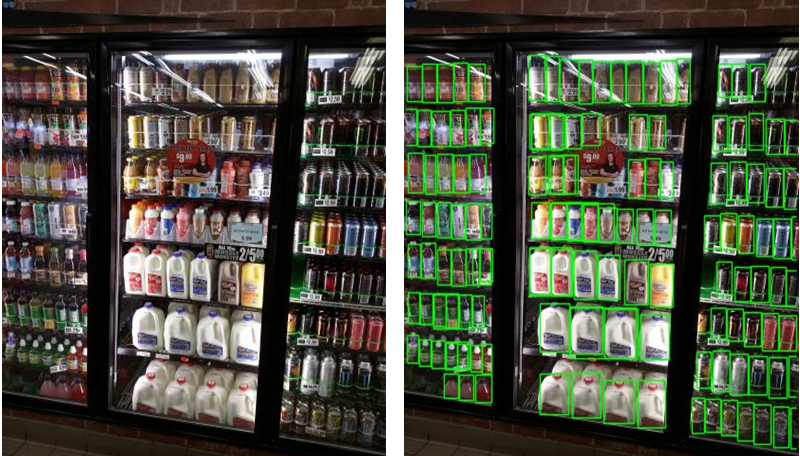

Camera System and Calibration

The store deployed a hybrid camera system combining stereo depth sensors (Intel RealSense D455) with 4K pan-tilt-zoom (PTZ) cameras for wide-area coverage.

Calibration combined traditional checkerboard patterns, used to estimate intrinsic parameters, with deep learning-based methods for dynamic distortion correction. Frames from overhead fisheye cameras were processed with distortion-invariant object detection models to mitigate occlusion in crowded areas.
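
Checkerboard calibration estimates the parameters of a projection model like the one sketched below: focal lengths fx and fy, principal point cx and cy, and radial distortion coefficients k1 and k2 (the pinhole-plus-radial form of OpenCV's standard camera model, with tangential terms omitted). Tools such as OpenCV's `calibrateCamera` fit these values by minimizing the reprojection error of the detected corners. The function name and example values are illustrative, and fisheye lenses such as the overhead cameras need a different distortion model.

```python
from typing import Tuple

def project_point(point: Tuple[float, float, float],
                  fx: float, fy: float, cx: float, cy: float,
                  k1: float = 0.0, k2: float = 0.0) -> Tuple[float, float]:
    """Project a 3D point in camera coordinates to pixel coordinates."""
    X, Y, Z = point
    x, y = X / Z, Y / Z                    # normalized image coordinates
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2  # radial distortion factor
    u = fx * x * radial + cx               # apply intrinsics
    v = fy * y * radial + cy
    return u, v
```

A point on the optical axis always lands on the principal point, while points farther from the center are pushed outward (k1 > 0) or inward (k1 < 0); calibration recovers the coefficients that best explain where the checkerboard corners actually appear.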

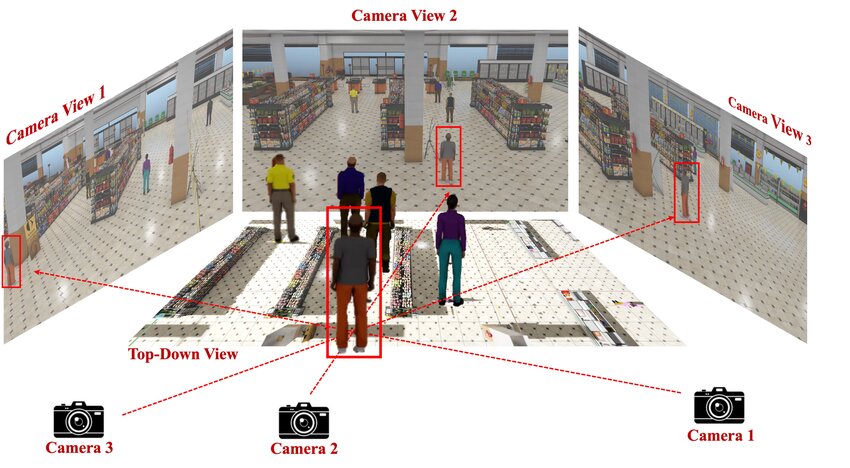

Virtual Mapping and Tracking

Customer tracking used DeepSORT, which combines Kalman-filter motion prediction with robust, appearance-aware association of detections across frames.
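
DeepSORT's Kalman filter tracks an 8-dimensional state (box center, aspect ratio, height, and their velocities) under a constant-velocity model. The sketch below reduces this to a single image coordinate to show the predict/update cycle. The class name and the noise values q and r are hypothetical, and the process noise is simplified to q times the identity.

```python
from typing import List

class ConstantVelocityKalman1D:
    """Track one image coordinate with state [position, velocity]."""

    def __init__(self, position: float, q: float = 1.0, r: float = 4.0):
        self.x: List[float] = [position, 0.0]              # state estimate
        self.P = [[10.0, 0.0], [0.0, 10.0]]                # state covariance
        self.q, self.r = q, r                              # process / measurement noise

    def predict(self, dt: float = 1.0) -> float:
        p, v = self.x
        self.x = [p + dt * v, v]                           # x = F x
        (a, b), (c, d) = self.P
        # P = F P F^T + Q, with F = [[1, dt], [0, 1]] and Q = q * I (simplified)
        self.P = [
            [a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
            [c + dt * d, d + self.q],
        ]
        return self.x[0]

    def update(self, z: float) -> None:
        (a, b), (c, d) = self.P
        s = a + self.r                    # innovation covariance, H = [1, 0]
        k0, k1 = a / s, c / s             # Kalman gain K = P H^T / s
        y = z - self.x[0]                 # innovation (measurement residual)
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P = (I - K H) P
        self.P = [
            [(1 - k0) * a, (1 - k0) * b],
            [c - k1 * a, d - k1 * b],
        ]
```

Calling `predict()` before each frame gives the expected position used to gate detections; `update()` then corrects the estimate with the matched detection, so a customer moving steadily is tracked with a learned velocity even through brief gaps.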

To handle cross-camera re-identification, we trained a transformer-based model (TransReID) on synthetic data. Real-time tracking latency was kept below 200 ms using ONNX Runtime with CUDA acceleration.
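
A common way to use re-identification embeddings such as TransReID's is to compare a new person crop against a gallery of known tracks by cosine similarity. The sketch below shows greedy nearest-neighbor matching. The gallery layout, the `match_identity` name, and the 0.7 threshold are assumptions, and a deployed system would typically add spatio-temporal constraints between cameras.

```python
import math
from typing import Dict, List, Optional

def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)

def match_identity(query: List[float], gallery: Dict[str, List[float]],
                   threshold: float = 0.7) -> Optional[str]:
    """Return the gallery ID most similar to `query`, or None if none clears `threshold`."""
    best_id, best_sim = None, threshold
    for identity, embedding in gallery.items():
        sim = cosine(query, embedding)
        if sim >= best_sim:  # keep the highest similarity seen so far
            best_id, best_sim = identity, sim
    return best_id
```

A `None` result means the crop does not resemble any tracked customer closely enough, so the tracker would open a new identity instead of risking a wrong hand-over between cameras.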

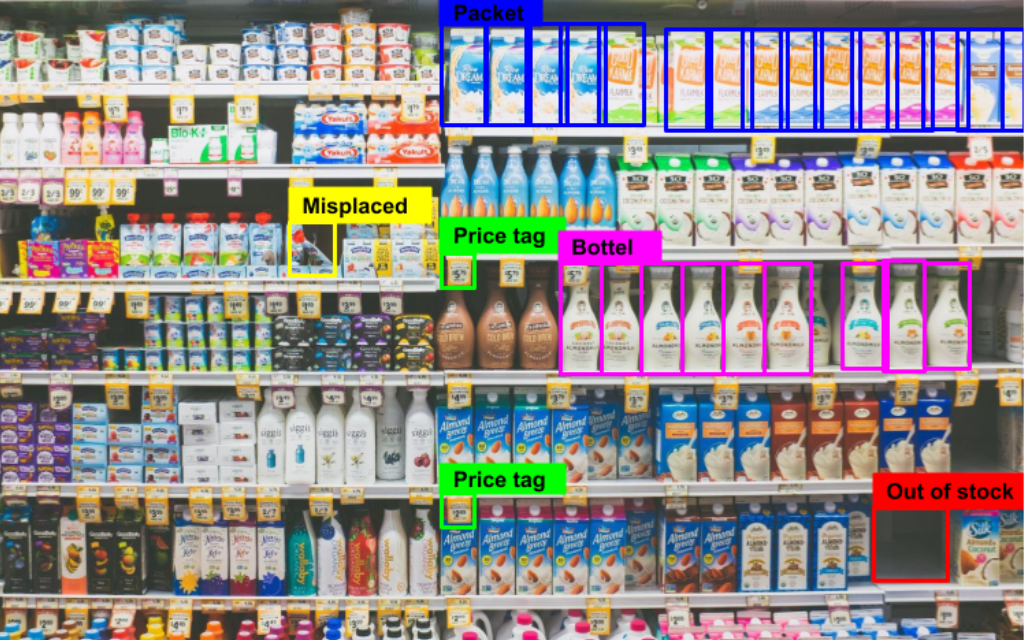

Object Detection and Recognition

Product recognition used a cascaded approach: YOLOv8 for real-time item localization followed by DETR-ResNet50 for fine-grained classification.
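
The cascade can be sketched as a two-stage loop: a fast detector proposes boxes, and only confident crops reach the slower fine-grained classifier. In this sketch, `detect` and `classify` are hypothetical stand-ins for YOLOv8 and DETR-ResNet50 wrappers, images are nested lists of pixel rows, and the 0.5 confidence cut-off is an assumed value.

```python
from typing import Callable, Iterable, List, Tuple

Box = Tuple[int, int, int, int]  # hypothetical (x1, y1, x2, y2) pixel layout
Image = List[List[int]]          # image as nested rows of pixel values

def crop(image: Image, box: Box) -> Image:
    """Cut the box region out of the image (rows y1..y2, columns x1..x2)."""
    x1, y1, x2, y2 = box
    return [row[x1:x2] for row in image[y1:y2]]

def cascade(
    image: Image,
    detect: Callable[[Image], Iterable[Tuple[Box, float]]],
    classify: Callable[[Image], Tuple[str, float]],
    min_conf: float = 0.5,
) -> List[Tuple[Box, str, float]]:
    """Localize items with `detect`, then classify each confident crop."""
    results = []
    for box, conf in detect(image):
        if conf < min_conf:
            continue  # skip weak proposals before the costlier second stage
        label, score = classify(crop(image, box))
        results.append((box, label, score))
    return results
```

The design keeps the expensive classifier off most of the frame: it only sees small crops that the detector is already fairly sure contain a product.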

To distinguish visually similar products, we incorporated metric learning with triplet loss, trained on a dataset covering 5,000 SKUs. For edge cases such as occluded items, we fused 2D visual data with RFID spatial signals from smart shelves.
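
Triplet loss trains the embedding so that an anchor image lies closer to another image of the same SKU (the positive) than to an image of a different SKU (the negative) by at least a margin α: L = max(0, ‖f(a) − f(p)‖ − ‖f(a) − f(n)‖ + α). The sketch below computes this for precomputed embeddings. The margin of 0.2 is an assumption, and triplet mining within batches is not shown.

```python
import math
from typing import List

def euclidean(a: List[float], b: List[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def triplet_loss(anchor: List[float], positive: List[float],
                 negative: List[float], margin: float = 0.2) -> float:
    """Hinge on distances: pull same-SKU pairs together, push different SKUs apart."""
    d_pos = euclidean(anchor, positive)
    d_neg = euclidean(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)
```

A triplet that is already well separated contributes zero loss, so training effort concentrates on look-alike products whose embeddings are still too close.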

Action Recognition

To detect product interactions, we deployed a temporal model combining SlowFast networks, whose slow and fast pathways extract features at two frame rates, with MediaPipe Hands for precise hand localization.

The system achieved an F1-score of 89.4% on the EPIC-KITCHENS benchmark, and its performance was also validated in real-world shopping scenarios.
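
For reference, the F1-score is the harmonic mean of precision and recall, equivalently 2·TP / (2·TP + FP + FN). The counts in the usage line below are made up for illustration, and the text does not state how per-class scores were averaged for the benchmark figure.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """Harmonic mean of precision and recall from raw event counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)
```

For example, `f1_score(tp=90, fp=10, fn=20)` gives about 0.857: spurious pick-up events (false positives) and missed ones (false negatives) both pull the score down.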

System Integration

The architecture used microservices for modular scalability, with gRPC ensuring low-latency communication between components.

Camera streams were processed through an optimized video analytics pipeline using NVIDIA DeepStream. The final system supported 150 concurrent users with end-to-end latency under 1.2 seconds.